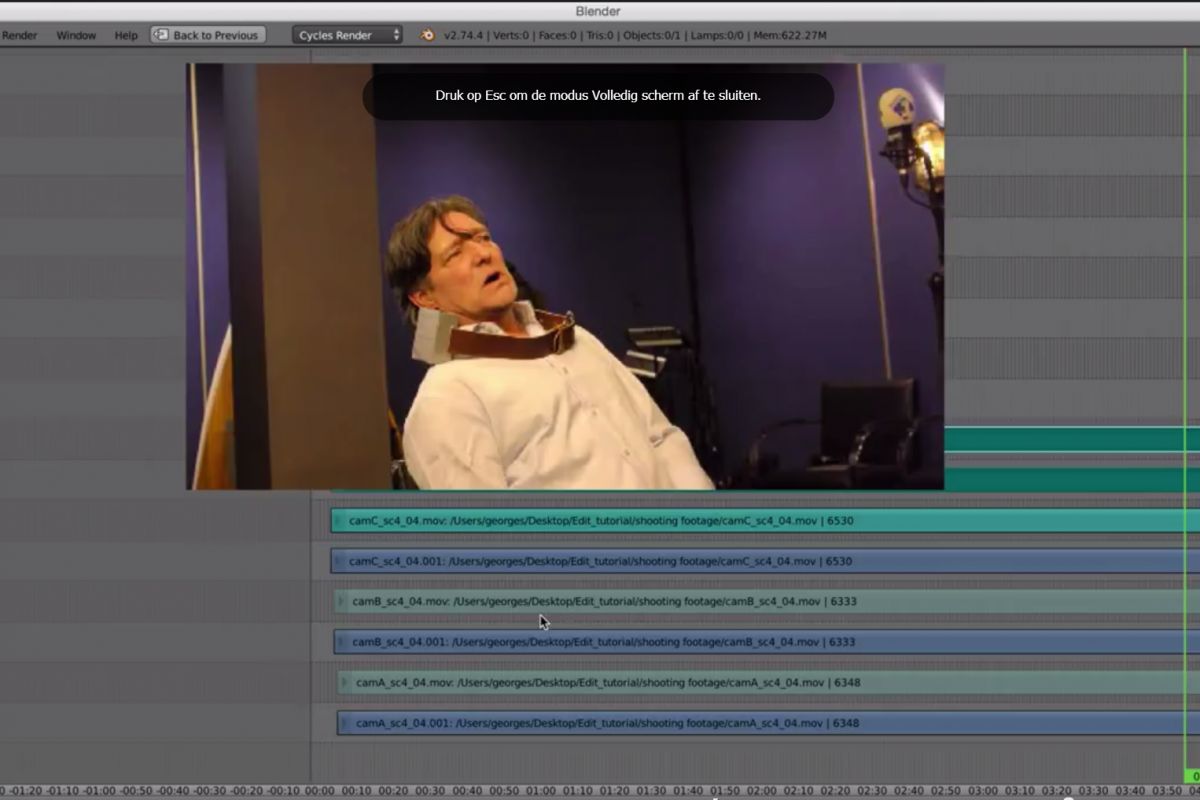

Here is the second part of the editing tutorial for Blender’s VSE, which I am using to edit Cosmos Laundromat. We’ve been using the VSE to edit the footage we shot with the actors and synchronize it with the audio recorded at the sound studio. The main difficulty has been Blender’s poor playback performance: it was struggling to play H.264 videos and .wav audio files at a smooth 24fps.

The basic problem was that Blender has to load the files into the cache before it can play them smoothly. But when you edit live-action footage from a camera, you can have up to 10 hours of HD footage to review and edit. It doesn’t make any sense to load all of it into the cache (by playing it once, for example) before being able to play it. On top of that, there is also a memory limitation: HD files are big, loading them into memory is problematic, and you can saturate (and “crash”) your RAM very fast…
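To put a rough number on that memory limitation, here is a back-of-the-envelope sketch. The frame size, channel count and bit depth are my assumptions for illustration (1920x1080, RGBA, 8 bits per channel); the real cache layout in Blender may differ, so treat the figures as an order of magnitude only.

```python
# Rough estimate of the RAM needed to hold decoded HD frames in a cache.
# Assumptions (not from the post): 1920x1080 frames, 4 channels (RGBA),
# 1 byte per channel, played back at 24 fps.
WIDTH, HEIGHT = 1920, 1080
CHANNELS = 4
BYTES_PER_CHANNEL = 1
FPS = 24


def frame_bytes():
    """Size in bytes of one decoded frame under the assumptions above."""
    return WIDTH * HEIGHT * CHANNELS * BYTES_PER_CHANNEL


def cache_bytes(seconds):
    """Bytes needed to cache `seconds` of decoded footage."""
    return frame_bytes() * FPS * seconds


def seconds_that_fit(ram_bytes):
    """How many seconds of decoded footage fit into `ram_bytes` of RAM."""
    return ram_bytes / (frame_bytes() * FPS)


if __name__ == "__main__":
    ten_hours = 10 * 60 * 60
    print("one frame:", frame_bytes(), "bytes")
    print("10 hours :", cache_bytes(ten_hours) / 1e12, "TB")
    print("16 GiB holds about", round(seconds_that_fit(16 * 1024**3)), "seconds")
```

Under these assumptions a single frame is about 8 MB, one second is about 200 MB, and 16 GiB of RAM holds less than a minute and a half of footage, which is why caching hours of rushes is not an option.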

So we worked hard (a special thanks to Anthonis!!) on improving the proxies to solve this issue. And here is how I worked on editing the actors!

Let me know if you have any questions, or if you feel I’ve left anything out.

P.S. Yes, I wear the same T-shirt on purpose! ;)

Thanks for the video, but I have an issue: the sound is not working after 18:20.

Apart from that, the proxy system looks great!

Lazy question (as I can’t download and try it myself right now, and because it might interest others): assuming I have the raw footage on a server, if I’ve made the proxies local, can I go offline from the server from that point on? Or will Blender still try to connect to that raw footage (at opening or something)?

Mathieu, your material has broken the sound :-(

Sorry for the audio problem, I’ll correct it as soon as possible!

Nice improvements!

A few questions:

Once you had your first edit of the multicam footage, what was your workflow for getting the right bits of audio (and video?) into the layout files? Or am I making some wrong assumptions?

And how do you handle the handover of the audio to the sound designer?

Stems from Blender? Original wav files + footage list? What’s the workflow?

Keep up the good work! Sheep on!

Hey J.

For editing the audio I did as I’ve shown in the tutorial, with scenes pre-edited with the footage I needed. Then for better trim/fade/cut in and out I copied the audio directly into the layout edit to fine tune later on when I was more sure about the takes.

And for the export to the sound designer, it’s an ongoing topic. For now I just export entire wav tracks, audio track per audio track… Since he’s going to use only the voices, it’s not that hard to do… But I’ll be investigating that in the coming weeks.

Great video!

From watching it, would these features help?

1) When you animate the volume, have Show Waveforms draw the original waveform at, say, 30% opacity (same as the current black display), and draw the new animated (and final) volume in full black (or a colour from a custom scheme, of course), reflecting the actual output. E.g. a volume of 0 would show a flat black waveform at the bottom even though the original might be at half height at that point, and the original would still be displayed at 30% opacity behind the animated one.

2) Have the video clip background show image frames from that video.

Here’s an example…

http://photos2.appleinsidercdn.com/fcx120131.jpg

Would be cool to see someone mock up how this might look within the current Blender UI!

Hey Mal!

1- Sure, we were talking with Anthonis about audio fading tools and UI display. So it’s an ongoing thing.

2- Well, it has been discussed, but from my experience I never use the video thumbnails in timelines, so I didn’t push for it. It’s a great thing to have, though… (it’s CPU-consuming and I preferred to focus on playback performance…)

But for the audio it’s a very good idea!

Hmmm, I wonder if you converted all the audio to fcurve, could you read them and match curves to each other?

Auto audio sync.

There would surely be peaks that are similar throughout a file. It would give you an offset between fcurves which could then be allocated to the corresponding VSE strips in your timeline.
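The peak-matching idea can be sketched in a few lines of plain Python. This is only an illustration of the principle (a brute-force cross-correlation), not an existing Blender feature: `best_lag` and `lag_to_frames` are hypothetical helper names, and the inputs are assumed to be two lists of volume values sampled at the same rate, for example read from baked fcurves.

```python
def best_lag(reference, other, max_lag):
    """Return the lag (in samples) at which `other` best matches `reference`.

    A positive result means `other` is late: other[i + lag] lines up with
    reference[i]. The score is a plain (unnormalized) cross-correlation,
    so shared peaks such as a clap dominate the result.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, value in enumerate(reference):
            j = i + lag
            if 0 <= j < len(other):
                score += value * other[j]
        if score > best_score:  # on ties, the first (smallest) lag wins
            best, best_score = lag, score
    return best


def lag_to_frames(lag, samples_per_second, fps=24):
    """Convert a lag in samples into an offset in video frames."""
    return lag / samples_per_second * fps


if __name__ == "__main__":
    camera = [0, 0, 1, 0, 0, 0.5, 0, 0, 0, 0]    # clap at sample 2
    recorder = [0, 0, 0, 0, 0, 1, 0, 0, 0.5, 0]  # same clap, 3 samples later
    print(best_lag(camera, recorder, max_lag=5))
```

The resulting offset would then have to be applied to the corresponding strip by hand or by a script. On real recordings the loops above would be far too slow at full sample rate, so one would probably compare downsampled volume envelopes instead.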

Hey David,

Yes, many NLEs have functions that auto-sync the audio based on waveforms. But when a proper “clap” job has been done on set, it’s very easy and fast to do it manually…

But an add-on would help ;)

Hey man this is great news about the sequencer. So thankful for your input.

How kool would it be if you didn’t have to tick that little box for the other sequences to be available and if you could access them as a “background image” in the 3D view port as well.

No more rendering for boards – how kool would that be. Anyways, great work, you’re an inspiration.

Hey man, did you know that FBX export from Blender doesn’t carry camera edits done in the Video (Movie) Sequence Editor over to Maya’s Camera Sequencer? (I’m sure that’s super complicated to program.)

Anyways, cheers man; freakn great input you’re having. Keep on smashing it, cause your history with other editors is really making Blender so much better.

Thanks man

Thank you very much Adam!

I had no idea about the camera edit export problem. I’ll keep this in mind! (and maybe push a developer on it, if he can use his magic “quick fix” buttons ;))

Cheers!

+1 to show the sequencer preview as a “background image” in the 3Dview!

kudos and thank you all, gooseberry people!!!

Hey Mathieu,

Great Tutorial!

Will the “Use Sequence”-Hack be further developed?

What would you think if the Blender VSE actually worked like this:

http://s9.postimg.org/5zjx6q0e5/blenber_vse_mockup.png

It’s a quick UI mockup I’ve done of what real (scene-independent) nested video-sequence functionality could look like in the Blender VSE. It’s inspired by the nesting behaviour in Adobe Premiere Pro, but would open up even more possibilities in Blender, like easy sequence relinking…

In my opinion it would bring Blender VSE to a whole new level.

What do you think about it? Could it be useful for project gooseberry?

Cheers!

Hey Anton,

In Blender you can already ‘kind of do’ this with scenes. Scenes are the sequences in your mockup. And relinking/replacing one scene with another is not that hard to do manually.

That’s interesting, but we also keep in mind here that Blender’s capabilities as editing software are tied to making an animation film, so its power comes from the 3D capacities too. I think it’s more relevant to push further on integrating the editing tools into the production pipeline: connecting the Asset Manager to the edit, assigning tasks to artists through the edit or BAM or Attract, auto-importing renders, exporting tasks or scenes to artists (automatically, as soon as the edit is modified)…

And even this requires a lot of development that we might not have the time to do before the end of this project, unfortunately.

That’s the whole problem in a production environment: time is a scarce resource :)

Thank you for re-uploading the video. Couldn’t leave without pointing out that FCPX will let you edit the timeline while playing.

BTW why not use the Multicam feature in Blender? I would have thought that proxy resolution in some side preview windows would have been perfect for switching this?