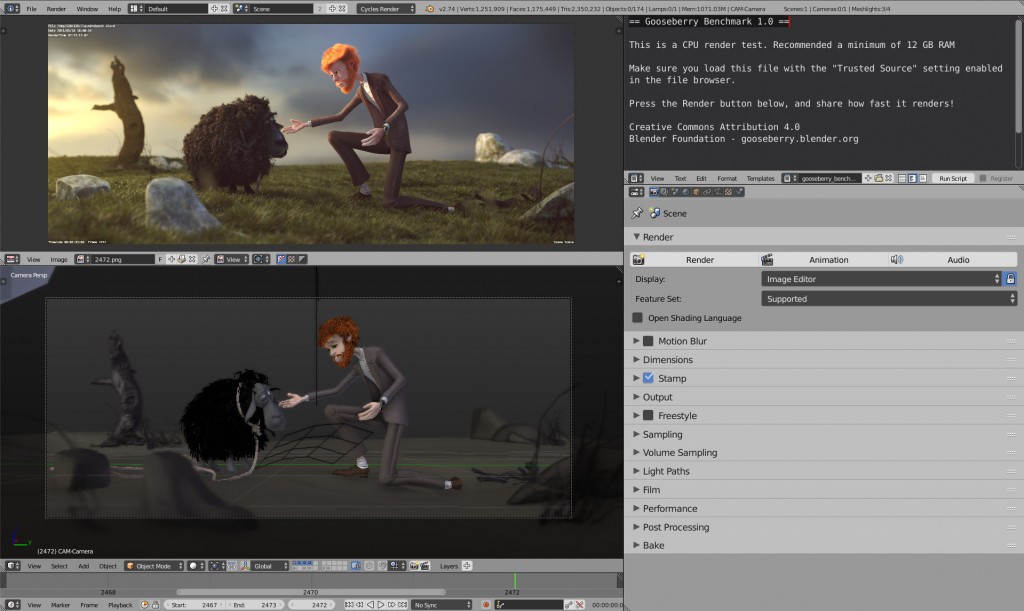

It’s about time to share a benchmark for production-quality renders: something nice and heavy, taking 8-12 GB of memory and requiring an hour to render at minimal quality! Here it is (240 MB).

Note: you have to load the file as “trusted” (allow scripts to run).

This is the 1.0 release of the file; we’d like to get feedback first and will still make some updates. We are also working on automatic registration of render stats via an add-on.

The table below shows how it renders here.

| Platform | CPU | Render time (sec) | Render time (min) |
|---|---|---|---|
| Linux-3.16.0-4-amd64-x86_64-with-debian-8.0 | Intel(R) Xeon(R) CPU E5-2697 v3 @ 2.60GHz x56 | 1133 | 19 |
| Darwin-13.4.0-x86_64-i386-64bit | Intel(R) Xeon(R) CPU E5-1680 v2 @ 3.00GHz x16 | 2916 | 49 |
| Linux-3.16.0-4-amd64-x86_64-with-debian-jessie-sid | Intel(R) Xeon(R) CPU L5520 @ 2.27GHz x16 | 4965 | 83 |
| Linux-3.16.0-4-amd64-x86_64-with-debian-jessie-sid | Intel(R) Core(TM) i7 CPU 930 @ 2.80GHz x8 | 7985 | 133 |

(Yep, we have a 28-core Intel doing 56 threads here. It’s amazing!)
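The minutes column is just the seconds column divided by 60 and rounded. A small Python sketch that reproduces it and adds a relative speedup against the slowest machine (the dictionary keys are only shorthand labels for the rows of the table, nothing more):

```python
# Render times from the benchmark table, in seconds (shorthand CPU label -> seconds).
RESULTS = {
    "Xeon E5-2697 v3 x56": 1133,
    "Xeon E5-1680 v2 x16": 2916,
    "Xeon L5520 x16": 4965,
    "Core i7 930 x8": 7985,
}


def to_minutes(seconds: float) -> int:
    """Convert a render time in seconds to whole minutes, rounded to the nearest minute."""
    return round(seconds / 60)


def speedup(baseline_s: float, other_s: float) -> float:
    """How many times faster `other` is than `baseline`."""
    return baseline_s / other_s


if __name__ == "__main__":
    slowest = max(RESULTS.values())
    for cpu, secs in RESULTS.items():
        print(f"{cpu:22} {secs:5d} sec {to_minutes(secs):4d} min "
              f"{speedup(slowest, secs):5.1f}x")
```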

More later, enjoy!

-Ton-

Are these the final render settings in terms of samples and light bounces etc???

No, the quality is still intermediate. We will make a small script to switch later.

Final quality will have more bounces and motion blur.

Love that render so much already!

Just finished the computation. Have the stats already been sent to you?

Not yet, the automatic stat submission feature will be in a later update.

Hi, is there a way to run this benchmark from the CLI in a manner that could be fully automated from start-up to completion, with the results then dumped to the output or to some file?

Thanks,

Michael Larabel

That info will be added. This renders frame 1:

blender -b -y -f 1
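For a fully automated run, a wrapper script can launch Blender in the background, time it, and dump the result to a file. A minimal Python sketch, assuming `blender` is on your PATH and the benchmark is saved as `benchmark.blend` (both placeholders). `-y` is placed before the .blend so the file’s scripts are allowed to run, matching the “trusted” note at the top; the JSON layout is made up for illustration:

```python
import json
import subprocess
import time
from pathlib import Path


def build_command(blender: str, blend_file: str, frame: int) -> list[str]:
    # -b: run without UI; -y: allow the file's scripts/drivers (must precede the file);
    # -f comes last because it triggers the render of that frame.
    return [blender, "-b", "-y", blend_file, "-f", str(frame)]


def run_benchmark(blender: str, blend_file: str, frame: int, report: Path) -> float:
    """Render one frame, measure wall-clock time, and write a small JSON report."""
    cmd = build_command(blender, blend_file, frame)
    start = time.perf_counter()
    completed = subprocess.run(cmd, capture_output=True, text=True, check=True)
    elapsed = time.perf_counter() - start
    report.write_text(json.dumps({
        "command": cmd,
        "frame": frame,
        "wall_clock_seconds": round(elapsed, 2),
        # Keep the end of Blender's console log, where it prints its own render time.
        "log_tail": completed.stdout.splitlines()[-20:],
    }, indent=2))
    return elapsed
```

Usage would be something like `run_benchmark("blender", "benchmark.blend", 1, Path("result.json"))`. The wall-clock time includes loading the 240 MB file, so it will be a bit longer than the render time Blender reports itself.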

Do you want the stats posted here?

Here goes:

OS X 10.10 (Hackintosh)

CPU Intel i7-4771 Haswell @ 3.50 GHz x 8

8 GB RAM

Render time: 78 min (1:17:53.92)

Peak Memory Consumption: 7828.38 MB

Thanks, not a bad stat! The new i7s are fast.

Ubuntu 14.04

i7-4930K @ 4.5 GHz

current master (-march=native)

48:06.72

:)

Intel(R) Xeon(R) CPU E5-2665 0 @ 2.40GHz

Linux 3.13.0-46-lowlatency #77-Ubuntu SMP PREEMPT Mon Mar 2 18:49:29 UTC 2015 x86_64 x86_64 x86_64 GNU/Linux

Peak: 7828.80M

Time: 32:08.32

Sorry, I forgot… this is a dual Xeon setup (32 logical cores).

Intel(R) Core(TM) i7-4770 CPU @ 3.40GHz

Linux 3.16.0-4-amd64 #1 SMP Debian 3.16.7-ckt7-1 (2015-03-01) x86_64 GNU/Linux

Time: 01:21:46.45

(Kaito, this one is for you)

Some Adaptive Sampler tests

On my laptop: Windows 7 64-bit, i7 2.3 GHz, 8 cores, 24 GB memory.

I set the Adaptive Sampler (AS) to a maximum of 65% noise and an update of 5, with adaptive distribution. This leaves some noise, since AS keeps sampling until a certain noise threshold is reached or the maximum number of samples is used up, whichever comes first.

But since I don’t use lossless video codecs, in final work this noise gets filtered out by the video compression codec in a movie. (As a reminder, YouTube doesn’t use lossless video codecs either.)

65-5 is what I use at work for “good” (not ultimate) quality.

Render time: 1:05

>> https://dl.dropboxusercontent.com/u/54767531/AS65-5T24.png

35-5 is what I use at work for reasonable quality.

Render time: 0:24

>> https://dl.dropboxusercontent.com/u/54767531/AS35-5-T24.png

Notice the look of the noise in AS: in the 35-5 render it looks like real photographic noise, as if it was shot with my Canon. It seems more ‘real’ (cameras are less good in dark areas too); the noise distribution is more like a real camera’s.

This noise differs from other kinds of noise (like using fewer samples): it comes from a limit based on a threshold on the per-tile noise level. The result is that each tile doesn’t get the same number of render samples, and that is what gives the time win.
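The behaviour described above (keep adding samples to a tile until its noise estimate drops below a threshold, or stop at the sample cap, whichever comes first) can be sketched as a toy model. This is not the code of that adaptive sampling branch: the 1/sqrt(samples) noise model and the per-tile `difficulty` factor are assumptions for illustration, and `threshold`/`step` only loosely correspond to settings like “65-5”:

```python
import math
from dataclasses import dataclass


@dataclass
class Tile:
    name: str
    difficulty: float  # hypothetical noise factor; dark or hairy tiles would be higher


def estimated_noise(tile: Tile, samples: int) -> float:
    # Toy model: Monte Carlo noise shrinks roughly as 1 / sqrt(samples).
    return tile.difficulty / math.sqrt(samples)


def adaptive_samples(tile: Tile, threshold: float, step: int, max_samples: int) -> int:
    """Add samples in batches of `step` until the noise estimate is at or below
    `threshold` or `max_samples` is reached, whichever comes first."""
    samples = min(step, max_samples)
    while samples < max_samples and estimated_noise(tile, samples) > threshold:
        samples = min(samples + step, max_samples)
    return samples


sky = Tile("sky", difficulty=1.0)
wool = Tile("wool", difficulty=10.0)
print(adaptive_samples(sky, threshold=0.25, step=5, max_samples=200))   # 20
print(adaptive_samples(wool, threshold=0.25, step=5, max_samples=200))  # 200
```

The easy tile stops early while the hard one runs to the cap; that uneven sample count per tile is where both the time win and the uneven, camera-like noise come from.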

The jacket has noise, but I think it might be reduced with a simpler shader.

Although, as said earlier, that noise expresses fabric better than plastic-looking CG. Though how one reacts to some noise is also partly a matter of taste.

I could test it with AS 80-5 too, but for this scene, with good global lighting, I don’t think there is a great benefit in using AS; it works better in other light/shader setups. Still, it’s not bad, although it is a bit of a trade-off between a noisy look and a noise-free look.

The sheep’s hair is slightly different, since this Blender branch didn’t have the Gooseberry hair tricks in it.

I like that noise pattern; it feels natural.

And good render times!

Hi, here it’s 2h 09m 34.11s

Intel i7-4790K, 16 GB RAM

Win 7 Professional 64-bit

Is the 2-hour timing for a single frame or for the whole sequence? Seems kinda slow if it’s for a single frame, given that’s a pretty beefy CPU.

Old HP DL585 server: 4x 3 GHz quad-core Opteron 8393

Ubuntu 14 x64, 16 threads

54 min 1 sec

Mac OSX 10.9.5

Xeon E5 @ 2.7GHz x 24

38:14.46

Linux Mint 17

Linux 3.13.0-24-generic, Intel i7-2600K 3.4 GHz, 8 cores, 32 GB, 2 x GTX 560 Ti [GPU not used]

Render time: 1:47:47.14, Memory 6986.53 MB, Peak Memory 7082.46 MB

Windows 7 x64 SP1

i7-2700K 3.5 GHz

16 GB of DDR3 1600

Render Time 1:37:55.56

Ubuntu 14.04 Linux 3.13.0-48-generic

AMD FX-8350 4 GHz x8

16 GB of DDR3 1333

Render Time: 1:56:56.61

Peak Memory: 6981.79Mb

Windows 7 64-bit

AMD FX-8320 4 GHz x8

16GB DDR3 1600

Render 02:18.89

Result from Alienware M17x laptop, running Linux Mint 17 OS

i7-4710MQ 2.5 GHz, 8 cores, 24 GB RAM, GTX 860M

Render Time: 1:29:29.75

Memory 7732.72M Peak 7828.65M

Intel Xeon CPU E5-2687W v3 @ 310Ghz (2 processors)

Windows 8 – 64 GB RAM

Time: 26:23.34

Blender build: 2015-03-03

Hash: 1965623

:D very nice render time!

Are you sure it is a 310 GHz processor? Not a 3.10 GHz?

iMac 27″ Mid-2011 with 3.4 GHz i7 and 12 GB system RAM: 1:50:30, peak memory 6891M

Model Name: iMac

Model Identifier: iMac12,2

Processor Name: Intel Core i7

Processor Speed: 3.4 GHz

Number of Processors: 1

Total Number of Cores: 4

L2 Cache (per Core): 256 KB

L3 Cache: 8 MB

Memory: 12 GB

Render Time: 03:19:06.87 | Mem: 6885,89 | Peak: 6981,82

Win7 64-bit | i5-3350P 3.10 GHz | 16 GB RAM

Blender 2.73a | Hash: bbf09d9

MacBook Pro (Retina, 15″, Early 2013)

OS X Yosemite

Processor: 2.7 GHz Intel Core i7

RAM: 16 GB 1600 MHz DDR3

Render Time: 01:51:05.58 | Mem: 7732.38 | Peak: 7828.31

Blender 2.73a | Hash: 1f547c1

Intel Core i7-5820K

Ubuntu 14.04 LTS, tiles 16×16: 63 min 25 sec

Win7, tiles 32×32: 103 min 32 sec

Win7, tiles 16×16: 98 min 21 sec

Hi everybody,

Am I right that the file is set up for Blender 2.74? I opened it in Blender 2.72, and Franck and Victor look quite naked, because they don’t have any hair or fur…

Kind regards,

Karl Andreas Groß

You are right, Blender 2.74 has some improvements to the particle system! And new features in general that we want to test.

Dual E5-2660 CPUs @ 2.6 GHz (20 physical cores + 20 virtual cores)

Windows 7

32 GB of RAM

Blender 2.73

Time: 42 min 33 sec

Peak Ram: 6.9 GB

The Intel® Xeon® Processor E5-2660

has 8 physical cores per processor, 16 with HT, 2.2 GHz (max. turbo 3 GHz),

so 32 HT threads in total (and 16 physical cores). Or do you have a different processor…?

Two E5-2660 v3 (10-core CPUs): 10 physical cores each, with HT. 40 cores total.

Intel Xeon E5-2660 v3 Haswell 2.6GHz 10 x 256KB L2 Cache 25MB L3 Cache LGA 2011-3 105W BX80644E52660V3 Server Processor

I have noticed that systems running Linux, for whatever reason, actually render faster than Windows machines. That is perhaps why you got a better time.

For me: i7-4771 @ 3.5 GHz (Turbo Mode 3.9 GHz max), 8 threads, 16 GB RAM, Fedora 22 Alpha

Render time 1:19:06 :) Good screen :) and good job team ;).

What about a GPU render test? Will you be doing them too?


Does this benchmark work in network render mode or is it for single system testing only?

It’s designed for local rendering, but you are free to test your own setup! :)

The reason I’m asking is that I have an HP DL980 G7 box with 8 sockets of 16 HT cores each. For some reason, I’m not able to get Blender to “run” correctly under Windows or Linux in native mode. However, I installed ESXi and created 16 VMs with 8 cores each (the maximum for the free version), and Blender does run in each VM. I ran some tests with other benchmarks, and it seems to run pretty well.

I would prefer to have a single blender instance run natively, but I don’t really know how to diagnose the problem. Blender starts, shows the main window then just exits.

And rendering from the command line?

./blender -b -y /path/to/benchmark.blend -f 1

Windows 8.1 Pro 64 bit

Intel® Xeon® Processor E5-2650 v2 (20M Cache, 2.60 GHz) x2 (8×2 = 16 cores)

Render time: 36:53.84

Great work, waiting to see the movie :-)

I tried GPU rendering; Blender and the GPU driver crashed with these render settings. I am using a GTX Titan Black.

The file currently is not ready for GPU render :(

Will it be possible to try on a Titan X (with 12 GB RAM)?

Nobody with a supercomputer to beat our Intels? I’m waiting for it! Some university maybe?

Not a supercomputer, but HP DL980 G7 does have 128 HT cores at 2GHz each…

Trying to find a way to run the benchmark in native mode.

I’ve been running this benchmark on a 48-core machine (4x Xeon E7-8857 v2), which is part of the Dutch national compute cluster Lisa, see https://surfsara.nl/systems/lisa/description. I get around 40-50 minutes render time. Looking at some of the other results posted, I would expect it to be a bit faster.

This is with the prebuilt binaries of 2.73a and command-line rendering and I notice that after tracing all the tiles (“… Path Tracing Tile 1728/1728”) blender spends a very long time without printing anything, must be at least 10 minutes, before continuing with the final composite step. Top shows it’s only using a single core at that time, any clues what is going on there?

The E7-8857 is a 12 core (24 HT core) chip, so if you ran with HT on, you would have 96 HT cores at 3GHz (288GHz total). Have you tried it with HT on? I have a pair of Dell R610 boxes, and find that HT works very well with Blender on all the tests I have done. I get almost double the non-HT rendering speed.

I believe HT is disabled on most of our systems, on purpose. I don’t know the specific reason for that actually.

I’m not sure how it affects the time, but we test the file with Blender 2.74.

Okay, compiled the latest git master and tried again. Now render time is down to 16:04.06, however… the image doesn’t match what I saw earlier. Specifically, the character pose is different and it looks like the final compositing step isn’t applied (the hands and face are definitely not as pink as the other renderings I did on other machines).

I render from the command line with

$ blender -b benchmark.blend -y -o //rendered.png -t 48 -f 1

and I get the feeling not all parameters are applied (correctly). I.e. the output file is named rendered.png0001.png, instead of just rendered.png.

Also tested the 2.74RC2 binaries and render time is 16:27.80. Coloring is correct in this version, but pose again is not.

Weird…

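The -y should come right after the -b, before the .blend file is loaded.

The Blender command line works like an instruction sequence: arguments are processed in the order they are given.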

blender -b -y benchmark.blend -o //rendered.png -t 48 -f 1
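Why the order matters, and where a name like rendered.png0001.png comes from, in a small Python sketch. The argument order follows the corrected command above (`-f` last, since it starts the render). The naming function is a simplified approximation of Blender’s behaviour, not a reimplementation: a run of `#` in the `-o` path is replaced by the zero-padded frame number, a path without `#` gets four digits appended, and then the file extension is added. `build_command` and `expected_output_name` are hypothetical helpers:

```python
import re


def build_command(blend_file: str, output: str, threads: int, frame: int,
                  blender: str = "blender") -> list[str]:
    # Left-to-right order: background mode, allow scripts, load the file,
    # then settings that apply to the loaded file, and finally render a frame.
    return [blender, "-b", "-y", blend_file,
            "-o", output, "-t", str(threads), "-f", str(frame)]


def expected_output_name(output: str, frame: int, ext: str = ".png",
                         padding: int = 4) -> str:
    """Approximate file name written for `frame` given an -o pattern."""
    name = output[2:] if output.startswith("//") else output  # '//' = next to the .blend
    runs = list(re.finditer(r"#+", name))
    if runs:
        last = runs[-1]  # replace the last run of '#' with the padded frame number
        name = name[:last.start()] + str(frame).zfill(len(last.group())) + name[last.end():]
    else:
        name += str(frame).zfill(padding)  # no '#': frame number is appended
    if not name.endswith(ext):
        name += ext  # file extension is added after the frame number
    return name


print(expected_output_name("//rendered.png", 1))   # rendered.png0001.png
print(expected_output_name("//rendered_####", 1))  # rendered_0001.png
```

So `-o //rendered_####` should give rendered_0001.png for frame 1, instead of the doubled extension.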

Okay, I now see that what I thought should be the result (the rendering shown at the top of this topic) is actually the last frame in the animation, not the first :)

So, with 2.74rc2 (binaries) the rendering was correct. Just rerendered and at 16:24.40 slightly faster than the earlier attempt.

Can’t get correctly composited (I assume) rendering with git master, though, when using -b -y …blend …

Oh, and I tried to compile git master with the Intel compiler, which can usually squeeze a bit more performance out of an Intel CPU than GCC, but it fails to compile. If you’re interested, I can retry to get the exact error.

I’m surprised to see so many essentially supercomputers here. It makes me think that the project files should be shared so people around the world can render individual frames or sequences and send them back to be compiled. That could almost beat the 17 million core computer Pixar used on Big Hero 6. But maybe that would be more useful on the (hopefully) next project, a full feature film.

OK, I ran the benchmark in network render mode on an HP DL980 G7 box.

I had some issues; notes below:

1) I was not able to get Blender to work in either Windows or Linux when installed on the base hardware (128 HT cores @ 2 GHz). I didn’t have time to do any significant debugging, but I would have preferred to run all 128 HT cores on a single instance of Blender.

2) I was able to use ESXi to create 16 x 8-core VMs and got Blender running on each VM under Linux. I’m using the free version of ESXi, so I was limited to 8 virtual cores per VM; thus I built a single VM and just copied it 15 times to get all 16 nodes.

3) When I rendered, the network render sent each of the 7 frames to 7 VMs, meaning that less than half of the box was being used. 9 of the VMs were idle. I suppose it would be nice to have the benchmark contain at least 16 frames, but it is what it is, and I don’t expect the admins to rework the test just for me.

4) Run-times of each frame varied from 2690 s to 3620 s, but all 7 frames were completed in 3620 seconds. The net processing power of the 7 VMs was 112 GHz out of the 256 GHz available on the box. So I should have been able to complete 16 frames in approximately the same time as the 7 frames, thanks to the idle cores.
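The arithmetic in point 4 generalizes: with one frame per node at a time, F frames on N equal nodes need ceil(F / N) rounds, and only min(F, N) nodes are busy in the first round. A quick Python sketch with a hypothetical `farm_utilization` helper; it assumes every frame takes the same time, which the 2690-3620 s spread shows is only roughly true:

```python
import math


def farm_utilization(frames: int, nodes: int, cores_per_node: int,
                     ghz_per_core: float) -> tuple[int, int, float, float]:
    """Return (busy nodes, rounds needed, GHz in use, GHz available)."""
    busy_nodes = min(frames, nodes)          # one frame per node at a time
    rounds = math.ceil(frames / nodes)       # batches needed to finish all frames
    used_ghz = busy_nodes * cores_per_node * ghz_per_core
    total_ghz = nodes * cores_per_node * ghz_per_core
    return busy_nodes, rounds, used_ghz, total_ghz


print(farm_utilization(7, 16, 8, 2.0))   # (7, 1, 112.0, 256.0)
print(farm_utilization(16, 16, 8, 2.0))  # (16, 1, 256.0, 256.0)
```

Both jobs take a single round, which is why 16 frames should finish in about the same time as 7.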

Anyhow, the takeaway is that this box in its current configuration is available for rendering if anyone wants to use it. If there is any interest, I could poke at it further and see if I can get Blender working in a single instance rather than 16 smaller instances, but I could use some help tracking down log files and such to tell me what’s going on inside Blender and what issues it’s having.

Intel i7-3930k @ 3.20Ghz

Windows 8 – 64 GB RAM

Time: 1:09:41

Blender 2.74rc2 – Rendered just as the file was setup

Amazon EC2 virtual machine instance:

C4.8xlarge

36 virtual cores

60GB RAM

Ubuntu Linux 14.04 x64

Blender 2.74RC2

Time: 22 min 14 sec

Cores should be equivalent to 2.9 GHz Intel® Xeon® E5-2666 v3 cores; for c4.8xlarge, with turbo boost they can speed up to 3.5 GHz.

Did not render exactly as expected – Victor’s pose is wrong with legs strangely twisted. Have not found yet, why this happened. Any ideas?

I used James Yonan’s Brenda scripts by the way… Easy to use and faster than fiddling with virtual machine directly through telnet or remote desktop.

You have to load the file as a trusted source (a checkbox in the open dialog). Some of the drivers, I am assuming, use some scripting that Blender blocks on startup (helps hinder malicious activities people could exploit through Blender). Just make sure you open the file through the open dialog and check that box. Hope that helps.

Oh, I totally forgot that, thanks!

I wonder if there is a way to modify this preference setting through the command line? I must change it on the machine that I made the AMI from, but if possible, I’d like to avoid the hassle of setting up Win > Linux remote desktop.

Ok, got a re-run using the gooseberry blender. Single frame Time: 22:34.30.

HP DL980 G7

X6550 x8 @ 2.00GHz = 128HT cores (256GHz) on a single CentOS instance.

Nice time!!

Time – 45:20.84

Peak Mem – 7828.21

Windows 8.1 x64

Intel Core i7 5960X OC 4.2ghz

64gb RAM DDR4

Did a benchmark on my humble PC.

Intel i7-3820 (4-core) at 3.60 ghz

12 GB RAM DDR3

Time – 1:59:12.44 (1 Hour 59 Minutes 12.44 Seconds)

Peak Memory – 7828.75 M

Blender 2.74.2 (26.03 git)

Dual Intel(R) Core(TM)2 Extreme CPU X9775 @ 3.20GHz

Linux 3.16.0-4-amd64 #1 SMP Debian 3.16.7-ckt7-1 (2015-03-01) x86_64 GNU/Linux

01:34:22.13

Intel(R) Core(TM) i7-4770 CPU @ 3.40GHz

24 GB RAM DDR3

Darwin Kernel Version 13.4.0: RELEASE_X86_64 x86_64

Mac OS X 10.9.5 Hackintosh

Blender 2.73a

1st run: Uptime 40 days

Time: 1:53:00.81

Mem: 6885.84M

Peak: 6981.77M

2nd run: Uptime 0 days (just after reboot)

Time: 1:35:02.91

Intel(R) Core(TM) i7-4770 CPU @ 3.40GHz

24 GB RAM DDR3

Darwin Kernel Version 13.4.0: RELEASE_X86_64 x86_64

Mac OS X 10.9.5 Hackintosh

Blender 2.74rc4

Time: 1:30:10.20

Mem: 7732.38

Peak: 7828.31

Blender 2.74 does indeed improve render times.

Mac Pro 2008

dual Intel XEON 5400 series 3.2 GHz total 8 cores

8 GB RAM DDR2

OSX 10.10.2

Blender 2.74rc4

Time: 1:42:54

Mem: 7733 Mb

Peak: 7829 Mb

I vote we rename this file “Computer Killer”

iMac 3.5 GHz Intel Core i7 2013

16 GB 1600 MHz DDR3

OSX 10.10.2

Blender 2.74rc2

Render Time: 01:19:08.30

Mem: 7732.3 MB

Peak: 7828.29 MB

Intel i7-4790k

Ubuntu 14.04

Linux 3.19.2-031902-generic

Blender 2.74+ (rev: ab2d05d) with -O3 -march=native (*1)

Render Time: 01:08:34.41

Mem: 7732.83 MB

Peak: 7828.76 MB

*1

-DCMAKE_C_FLAGS_RELWITHDEBINFO:STRING="-O3 -march=native -g -DNDEBUG" -DCMAKE_CXX_FLAGS_RELWITHDEBINFO:STRING="-O3 -march=native -g -DNDEBUG"

Intel(R) Core i5-4440 CPU @ 3.10 GHz, 8 GB RAM

480 minutes

Correction: 180 minutes (3 hours)

Intel i7-4771 CPU @ 3.50 GHz

RAM 16 GB

Win 7 64-bit

Frame: 2472

Render time: 01:45:36.25

Mem 7732.82M

Peak: 7828.75

Blender 2.74 Hash:000dfc0

Ubuntu 15.04

Intel Core i7-3770K (Ivy Bridge) @ 3.5GHz

8 threads (autodetected)

16GB RAM

no settings changed

1:27:42.56

Not that slow for an almost three-year-old machine. I would really like to know how fast a GPU can handle this particle hell.

Win 7 64-bit

RAM: 16 GB

i7 5960x 3.9Ghz

49:24

Dual Intel(R) Xeon(R) CPU X5670 @ 2.93GHz

Linux 3.16.0-4-amd64 #1 SMP Debian 3.16.7-ckt9-3 (2015-04-23) x86_64 GNU/Linux Blender 2.74

Time: 00:42:50.56

@EIBRIEL You said the file is currently not ready for GPU render. Which is true…I did some GPU render tests that crashed blender every time and sometimes the Nvidia driver as well.

The only reason I found for it not being GPU-ready is the SSS (Subsurface Scattering) shader used on Victor’s skin, which is currently experimental. Is this really the only show-stopper? Or am I missing something here…

Hi

I’ve tried on a Quadro M6000 without success. I will try in experimental mode with a new build, but I’m still not sure that this is enough…

Greetings

Jakub

31 minutes 52 seconds on one of RenderStreet’s CPU servers. How are the settings for the final shot compared with this one?

AMD Phenom II X6 1100 (6 threads)

16GB RAM

Gentoo 3.18.12 x86_64

Blender 2.74 (Hash: 000dfc0) official binary

Frame: 2472

Peak: 7828.65M

Time: 02:06:29.82

Win 7

CPU Intel i7-3820 @ 3.60 GHz x 8

32 GB Ram

Render time: 2:01:22.09

Peak Memory Consumption: 7732.82 Mb

Thank you so much for this cool benchmark ;-)

Both rigs were freshly installed with Windows 8.1 Enterprise

2x E5-2630v2 2.6GHz (12c/24t), 32GB Ram

Frame: 2472

Time: 48:57.48

Mem: 7732.82M

Peak: 7828.75M

i7 3930K 3.2GHz (6c/12t), 24GB Ram

Frame: 2472

Time: 1:19:36.86

Mem 6481.75M

Peak: 6577.68M

Intel(R) Xeon(R) CPU E5-2683 v3 @ 2.00GHz 14C/28T

Frame: 2472

Time: 33:29.10

Mem: 6481.68M

Peak: 6577.61M

Intel(R) Xeon(R) CPU E5-2630 v3 @ 2.4GHz 16C/32T

Frame: 2472

Time: 29:16.98

Mem: 6481.77M

Peak: 6577.70M

looks amazing, can’t wait to see the final movie

thanks

Time – 39:17

Peak Mem – 6481.74M

Windows 10 PRO x64

Intel Core i7 5960X OC 4.5ghz

64gb RAM DDR4

Time: 1:16:02.49

Mem Peak: 6577.60M

HP Z600 Workstation

Dual Xeon X5550 (4cores 8threads 2,67GHz 3,0GHz boost)

24GB DDR3 ECC RAM

Windows 7 Pro 64bit

Ubuntu 15.10 with the same hardware is at 01:13:17.85 with a peak of 6577.70M

Blender 2.75a

Frame: 2472

Time: 58:38.78

Mem: 6481.66M

Peak: 6577.60M

HP DL585 G6

4 Hex Opteron 8439 (24cores, 2.8 GHz)

128GB DDR2 ECC RAM

Windows Server 2008 R2

Beautiful render.

So, my grandson got a hot, new graphics card for his workstation (a system I built a couple years ago), an Nvidia 980ti (about $800). Recently, I got this old but great server from Potomac eSTORE for about half that. It’s about a 5-year old system, expensive and fast in its day. Had to add an extra circuit in the house to power it. Got a cheapie VGA monitor, mouse and keyboard for it. It all works fine.

I recently installed Windows Server 2008 R2 on it. Today, I finished getting all the firmware and OS updates. I got Blender software going on it and downloaded this great benchmark program to test it. The system actually used about 20 GB of RAM total while doing the job.

So, my grandson, confident that his new graphics card would smoke my server, also ran the render. But here’s the issue: he has 8 GB of system RAM and 6 GB of RAM on his graphics card. First, his system froze as it thrashed on the benchmark, which wants 9 GB of RAM. Finally, it got to the point where it tried to load to the graphics card. The graphics card barfed and canceled the job. In his words… “It’s not fair!”

There is something to be said about older technology that, while not as glamorous, is still able to do jobs that can bring some new technology to its knees. We’re going to have another run of the server against his graphics card tomorrow. This will be an animation job of mine with a much smaller RAM footprint, so it will be a more fair test. His system will probably smoke mine. But, that’s okay. The old server has already proven it packs a hefty and effective punch.

Linux Mint 17.2 Cinnamon (2.6.11) 64bit

Xeon E7540 2.00ghz 6 core x 4 (48 cores HT) – DL580 G7

(Linux System Monitor only shows 32 active CPU cores while rendering; does anyone know why, and how to enable all of them?)

Total Mem 128GB – 17GB in Use whilst Rendering

Result;

Frame 2472 | Time 33.57.19 | Mem 6481.68M, Peak 6577.61M

An update: the server was serviced with a different Linux distribution and CPUs,

and updated to Blender 2.78c.

Ubuntu Studio 16.10 – 64 bit

Xeon 4860 10-core x 4 (80 threads), 128 GB DDR3 ECC RAM, DL580 G7

Tiles 32×32

Frame 2472 | Time 20.08.66 | Mem: 6820.94M, Peak: 6916.87M

Tiles 16×16

Frame 2472 | Time 19.49.64 | Mem: 6820.94M, Peak: 6916.87M

Time: 50:35.29

Mem Peak: 6577.63M

Mac Pro 5,1 (Mid 2012)

Processors: 2 x 2.4 GHz 6-Core Intel Xeon E5645 (12 CORES – 24 threads)

32 GB DDR3 ECC RAM

OS X Yosemite 10.10.5

Blender 2.76

The file is broken in Blender 2.75a.

Victor’s armature is destroyed.

Yup, in 2.76 as well.

hmm first pass with dual 2680 v2 is 33.42

Time: 1:03

Time 1:12

Time 5+

HP Proliant DL580G5

Server 2012 R2

Intel(R) Xeon(R) CPU E7450 @ 2.4 GHz, 4 processors (24 threads), 128 GB of RAM.

I have 3 of these machines, and one of the CPU trays renders slower than the others. I’ve been all over the BIOS and as of yet I’ve not figured out why. Of the two faster ones, one has more going on in the background than the other, so that accounts for that. But one machine seems to take about 5 times longer no matter what I do. I’ve been all through the BIOS and I’ve swapped drives and RAM out. And so far it doesn’t seem to be an overheating problem on the CPUs.

Time 35:37.93

Dual Xeon E5-2670 8core 16thread

16Gb memory

Windows 10

Blender 2.76b

Hmmm, OK I agree, Linux rocks

Time 28:34.17

Dual Xeon E5-2670 8core 16thread

16Gb memory

Linux Mint 17.3

Blender 2.76b

Frame 2472

Blender 2.77a

Windows 10 Pro 64 bit

i7-6700k 4 GHz

16 GB

time: 1h 13min 2sec

Linux Ubuntu Studio 15.10 64-bit

i7-6700k 4 GHz

16 GB

time: 1h 1min 42sec

On Windows, Blender’s CPU usage was always at 99% because of a lot of other things running in the background.

I think this is the reason for the time difference, i.e. why Linux is faster.

By the way, the first movie part you can download via Blender Cloud is about 10 minutes long. At, e.g., 30 fps, that means I would have to render 18k frames, which at roughly an hour per frame means 18k hours, i.e. 750 days, i.e. about 2 years!

I will write down the exact time after finishing!
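The estimate above is frames = minutes × 60 × fps, multiplied by the time per frame. As a Python sketch with a hypothetical `sequence_cost` helper (the one-hour-per-frame figure is an assumption based on the i7-6700K times just posted; the `machines` split assumes perfect scaling):

```python
def sequence_cost(minutes: float, fps: int, hours_per_frame: float,
                  machines: int = 1) -> tuple[int, float, float, float]:
    """Return (frames, wall-clock hours, days, years) for rendering a whole sequence."""
    frames = round(minutes * 60 * fps)
    hours = frames * hours_per_frame / machines  # assumes frames are split evenly
    days = hours / 24
    years = days / 365
    return frames, hours, days, years


frames, hours, days, years = sequence_cost(10, 30, 1.0)
print(f"{frames} frames, {hours:.0f} h, {days:.0f} days, {years:.2f} years")
```

Spread over 100 equal machines (`machines=100`), the same job would come down to 7.5 days.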

Blender 2.77a

Dual E5-2683 v4

Time: 14:55.19

Blender 2.75a

Dual E5-2680 v2 2.8GHz

96GB ram

Time: 26:53.98

Oops, I have to upgrade Blender, because Victor’s armature was broken, as stated earlier.

Blender 2.77a

Dual E5-2683 v4

(16×16 tiles, 128 threads)

Time: 14:24.57

Blender 2.77 dev build + macOS Sierra

Dual E5-2683 v4

(16×16 tiles, 128 threads)

Time: 13:42.16

Blender 2.78 RC1, Windows 10 Pro

Dual Xeon E5-2683 v4, 64GB RAM

Time: 24:17.07

Windows 10 is a poor choice for rendering, Ubuntu would probably shave ~10 minutes off that.

GPU Render Attempt

Dual GeForce 1080 GTX, 16GB Total Video RAM

Failed – CUDA out-of-memory at around ~14GB used

Was worth a shot. :p

Blender 2.78

Asus z9pa-d8 mobo

Dual Xeon E5-2667 v2

(16×16 tiles 32 Threads)

31:35.96

Mac Pro 2009

OSX Sierra

3,46 GHz 6-Core Intel Xeon W3690

32 GB RAM

Blender 2.77

32×32 Tiles

01:11:56

Ubuntu 14.04.1

2 x E5-2670 v0 (8c/16t x 2)

64GB RAM

00:28:05.51

Frame 2472

Blender 2.78a

Windows 7 Pro 64 bit

Xeon X5690 x2 @ 3.47GHz

32 GB

time: 44:40.66, Memory peak 6935.68

Frame 2472

Blender 2.78a

Windows 7 Pro 64 bit

Intel Xeon E3 1241 v3 @ 3.50GHz

32 GB

time: 01:39:35.78 Mem Peak: 6935.90M

2x Intel Xeon 5650 @ stock speed

24 Gb Ram

52:38.57

Windows 10 Pro.

Peak Mem 6935.90M

Intel Xeon CPU E5-2670W v0 @ 2.60Ghz (2 processors)

Windows 7 PRO – 96 GB RAM

————————-

Time: 35:39.69

————————-

Peak Mem 6935.90M

Frame 2472

——————–

Intel Xeon E52770 x2 @ 2.60GHz

96 Gb Ram

——————–

TIME: 35:39.69

——————–

Windows 7 Pro.

Peak Mem 6935.90M

Blender 2.78a

2nd bench (my 2nd pc)

Frame 2472

——————–

Intel Core i7-3770 Quad-Core Processor 3.4 GHz (overclocked at 4.2 GHz)

32 Gb Ram

——————–

TIME: 1:47:00

——————–

Windows 7 Pro. / Blender 2.78a

======================================

I have an idea…

First, I want to say that I’ve been a photographer/videographer for many years now, but I am quite new to 3D animation, so feel free to correct me if I am wrong…

When I rendered for the 2nd time with my backup PC, I noticed how much of a waste of time/energy it is to render something twice. Thinking of all those people (us) rendering the same frame made me think. So:

The idea is to have a community in which 3D animators can help each other with rendering… For example:

– If there is a project to be rendered, and there is a deadline for it, we could each take a few frames, render them on our machines, and upload them back to the creator, at the same quality/samples etc. That way, projects like this beautiful animation could be rendered in just a few hours…

Even better would be an online program that provided an easy way to distribute those frames to users and return the rendered PNG files to the creator in the correct order…
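The bookkeeping part of this idea (cutting a frame range into chunks, one per contributor, so the rendered frames can be put back in order) is simple. A minimal Python sketch; `split_frames` is a hypothetical helper with no networking, scheduling, or fault handling, which is what real distributed-rendering systems add on top. The printed commands use the inclusive `-f first..last` range syntax that appears elsewhere in this thread, and `benchmark.blend` is a placeholder:

```python
def split_frames(first: int, last: int, contributors: int) -> list[range]:
    """Split the inclusive frame range first..last into at most `contributors`
    contiguous chunks whose sizes differ by at most one frame."""
    total = last - first + 1
    base, extra = divmod(total, contributors)
    chunks = []
    start = first
    for i in range(contributors):
        size = base + (1 if i < extra else 0)
        if size == 0:
            break  # more contributors than frames
        chunks.append(range(start, start + size))
        start += size
    return chunks


for chunk in split_frames(2425, 2473, 4):
    # Each contributor renders one inclusive range, first..last
    print(f"blender -b -y benchmark.blend -f {chunk.start}..{chunk[-1]}")
```

Because the chunks are contiguous and in order, putting the results back together is just concatenating them chunk by chunk.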

What are your thoughts about this idea?

Thank you for reading…

This is not an active blog, the movie project ended 1.5 year ago. Check blender.org ‘support’ for better places.

This idea is called distributed computing, or more specifically distributed rendering. It has existed since the early days of the internet. Look into BOINC. SETI@home was the first time I used it, back in the 90s. Blender has a channel for it.

I did a few tests with this old benchmark blend, since I wanted to see what my old CPU could do: 1x Intel Xeon E5 v4 (LGA 2011-3), 18 cores, 36 threads, 32 GB RAM, Windows 10, Blender 2.78c.

2048×858 resolution, render time of 26:13 (benchmark unchanged, running render from command line)

7680×4320 resolution, render time of 2:20:44 (128 samples)

I got the Cosmos Laundromat movie on Blu-ray from the Blender Store ages back, so I don’t need to render the film to watch it. But I still had a little go at rendering a few seconds of animation from the benchmark blend. I adjusted the samples down to 128 to speed up the per-frame render time a little on my PC. It took 18-20 min per frame on average, so about 16 hours to render 49 frames, to get just over 2 seconds of Victor and Franck running at 24 fps at 2048×858. I only had one problem: frame 2443 crashed while running in the batch, so I rendered it again separately.

blender -b -y benchmark.blend -o //PNG -t 36 -f 2425..2473
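A quick check of the numbers in that post, as a Python sketch with a hypothetical `batch_estimate` helper: the `..` range passed to `-f` is inclusive, so 2425..2473 is 49 frames, which at 24 fps is just over 2 seconds of animation, and at 18-20 minutes per frame comes to roughly 14.7-16.3 hours:

```python
def batch_estimate(first: int, last: int, fps: float, min_minutes: float,
                   max_minutes: float) -> tuple[int, float, float, float]:
    """Return (frames, seconds of animation, min hours, max hours) for an inclusive range."""
    frames = last - first + 1  # both ends of first..last are rendered
    seconds_of_animation = frames / fps
    return (frames, seconds_of_animation,
            frames * min_minutes / 60, frames * max_minutes / 60)


frames, secs, low_h, high_h = batch_estimate(2425, 2473, 24, 18, 20)
print(f"{frames} frames, {secs:.2f} s of animation, {low_h:.1f}-{high_h:.1f} h")
# 49 frames, 2.04 s of animation, 14.7-16.3 h
```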

I successfully ran this benchmark with

GPU: 3x 980 Ti, texture limit 2048 + culling.

CPU G3258

8GB system ram

time:

57m55s

Previous time was at 32×32, not optimal for GPU.

Changed tile size to 384×384 & upgraded to 24GB no more swapping.

8m45s
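Why tile size matters so much here: the benchmark renders at 2048×858, and at 32×32 that is exactly the 1728 tiles seen in the “Path Tracing Tile 1728/1728” log line earlier in the thread, while 384×384 needs only 18. The usual Cycles advice of that era was small tiles for CPUs and large tiles for GPUs, roughly because a GPU works best when it gets one big chunk of work at a time. A Python sketch of the tile count, with a hypothetical `tile_count` helper (edge tiles are partial but still count):

```python
import math


def tile_count(width: int, height: int, tile_w: int, tile_h: int) -> int:
    # Partial tiles at the right and bottom edges still count as whole tiles.
    return math.ceil(width / tile_w) * math.ceil(height / tile_h)


for size in (16, 32, 384):
    print(f"{size}x{size}: {tile_count(2048, 858, size, size)} tiles")
# 16x16: 6912 tiles
# 32x32: 1728 tiles
# 384x384: 18 tiles
```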

https://i.imgur.com/qDnxrRc.png

I decreased my time substantially, and am at the top of the heap.

9m52s

same settings save 384×384 tile size (needed for GPU)

Dual XEON E5-2680v3

24 cores (48 threads)

Win 10 Pro 64bit

64GB RAM

Frame: 2472

Render time: 20:03.45 (1203.45 seconds)

Mem: 6683.33M, Peak: 6779.25M

Hello!

The sheep (Franck) shows up naked when the file is opened, and Cycles renders it naked too… There is a red circle on top of the eye in the outliner. I am not the most knowledgeable with Blender, but I have tried toggling on all the fur in the outliner, to no avail. The render still shows none of the sheep’s woolly fur. What am I doing wrong?

I am running Blender 2.79 and also had the same results in 2.74

i7 – 7700k

EVGA 1080 Hybrid

Windows 10 Pro

Dual Xeon Silver 4110 2.2 GHz (3 GHz turbo)

20 Cores

Quadro P4000, 64 GB RAM

Windows 10

Render Time: 27:28.59

I’d like to claim the first sub 5 minute time at 4:35.06 using one of the University of Waikato’s new Driving Sim boxes.

As you would expect there are a few tricks. Mainly using one of the new nightly builds with combined CPU+GPU compute. I set the tile size to 16×16 and the thread count to GPUs + CPU cores.

The machine is an overclocked i9-7980XE with three 1080 Tis, running Windows 10.

I look forward to seeing what those 8-GPU servers out there spit out!

(And BTW, a truly great movie – incredible concept and immaculately rendered!)

Stock Threadripper 1950X on Windows 7Pro – 2x8GB DDR4 RAM@2166

Render time: 27:25.64

6700k with 32GB@3000

Vega Frontier Edition Liquid

Win10

Render Time: 22:02.50

AMD Ryzen 1700X 8 cores 16 threads @ 3.4GHz , 2x8GB DDR4 3000

AMD RX580

Windows 10 pro

Blender 2.79

Render time : 44:36.76

Windows 10 Pro x64 Build 1709

Intel i9-7980XE (18 Cores) @4.3ghz

32 GB of DDR4 3200mhz

GPU: ASUS ROG STRIX GTX 1080ti(x2)

Render Time 06:41.32 (Just under 7 minutes)

Windows 10 x64

AMD Threadripper 1950x (pstate overclock to 3.9GHz)

32GB DDR4-3200

GPU Quadro K1200

Render time: 20:37.60

Intel i7-8086K (6C/12T) @ 5.2GHz (1.31V)

32GB DDR4-3200

nVidia GTX1080Ti (not used)

36 min 10.63 seconds

This is a speedy li’l thing, this CPU. Haven’t got my Noctua cooler yet but I reckon this one will easily clock higher with a few more milli-volts!

Intel Xeon E5-1650 Sandy Bridge E (6C/12T) @ 4ghz

16Gb DDR3 1600

No GFX used

1hr 00 seconds

Not bad for an old girl!


Windows 10 64bit, Blender 2.79b

AMD Threadripper 2950x (16C/32T), stock frequencies

32 GB DDR4 3200 CL14, G.Skill FlareX

Asrock Taichi x399 Motherboard

2x GTX 1080ti

Render time: 19 Minutes in CPU mode

Windows 10 64bit, Blender 2.79b

AMD Threadripper 2950x (16C/32T), stock frequencies

32 GB DDR4 3200 CL14, G.Skill FlareX

Asrock Taichi x399 Motherboard

2x GTX 1080ti

Render time: 9:30 minutes in pure GPU mode

Windows 10 64-bit Blender 2.80 Alpha 2

Intel i7-7700K 32 GB DDR4 (4C/8T) stock settings

ASUS B250

8x GTX1070Ti

Total Render time: 7 minutes 12 seconds (GPU only)

note: image begins rendering on-screen at the 5 minute and 30 second mark.

Windows 10 64-bit (Fall Creators Update), Blender 2.79a

i7 4790K (4C/8T) 4.4Ghz

32gb DDR3 1600Mhz

Gigabyte Z97X Gaming 3

1 Hour 11 Minutes 9 Seconds in CPU mode

Blender 2.8 beta with GPU Compute

(set to share between stock 1080 (8GB version) and stock Threadripper 1950x)

64GB DDR4@3200 CL???

ASUS ROG Zenith Extreme

my own gentoo setup

Render time: 7:45.56

Blender 2.79.7

i7 7700k @ 4.8GHz

16GB RAM

AMD Radeon VII

Windows 10 x64

13:09 minutes

Blender 2.80 beta (compiled from source)

i7-5930K CPU @ 3.50GHz

32GB RAM

1 RTX 2080 Ti

Ubuntu 18.04

Cuda 10.0 driver

GPU + CPU rendering

18:56.13

A venerable Mac Pro 5,1, OS X

2 x X5690 3.46 GHz

41’11”

64 GB RAM

Max RAM usage: 13.65 GB

Hi, HP620

2x E5-2660V2 (20 cores, 40 threads)

64 gb RAM

28′ 32″

ubuntu 19.04

i7 7700k

32gb RAM

RTX2080

Cuda 10.0

GPU rendering

Time:06:20.25

ubuntu 19.04

i7 7700k

32gb RAM

RTX2080

Cuda 10.0

Blender 2.80

GPU+CPU rendering

Time:06:09.55

Just tested this out on my updated PC,

AMD Ryzen 9 3900X

32GB RAM

GTX 1070

Blender 2.8

Time: 13:05 Minutes

Windows 10 running Coreprio

AMD 2990WX, stable OC @ 3.5 GHz (32 cores)

2 x 1080ti

64GB RAM

Blender 2.81

Time: 4:26 Minutes

CPU+GPU render 16×16 tile size

My new Rig:

Ryzen 9 3900X all cores @ 4,375 GHz air cooled, max. 84 °C

64GB DDR4 3600

MSI RTX 2080 Super

Blender 2.81

Time for CPU-Render: 11:45.46